How to Build a B2C Database That Actually Produces Sales

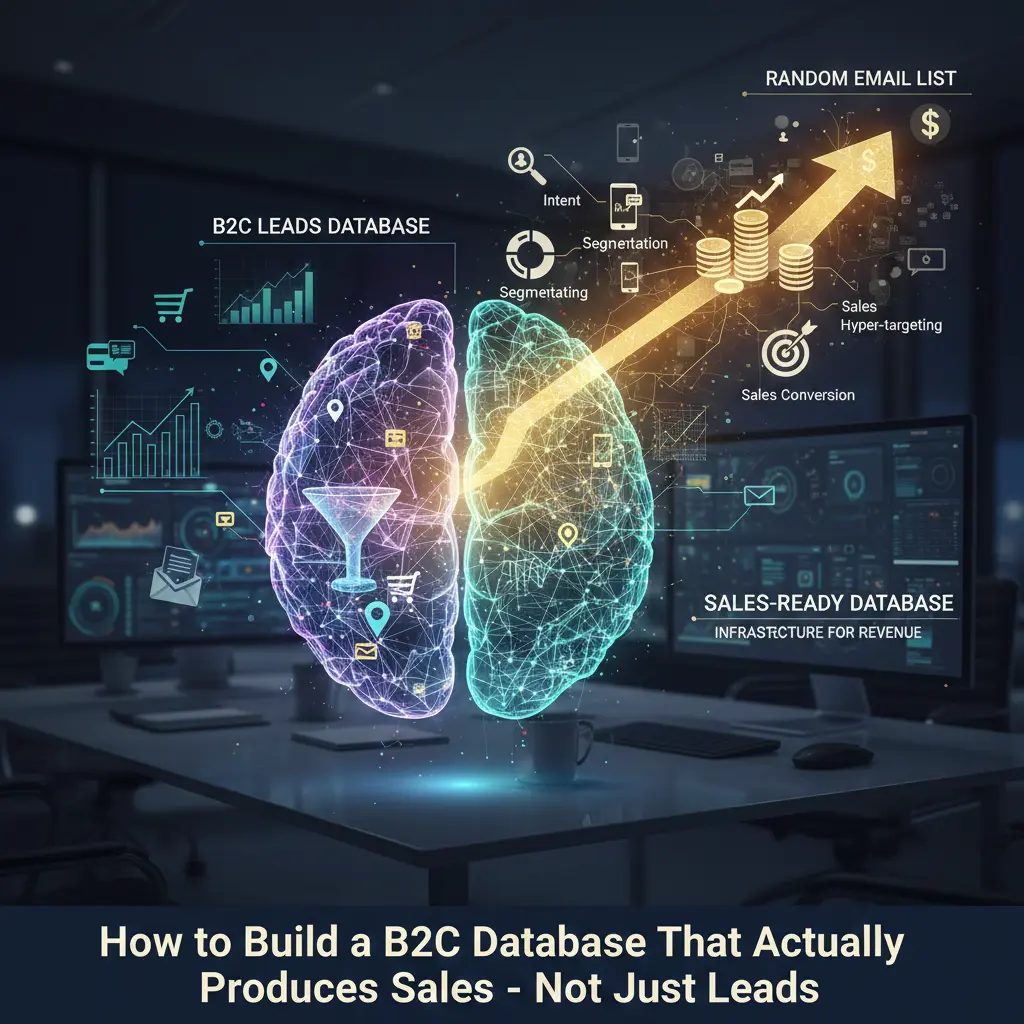

Most companies proudly claim they own a B2C database. But when you dig deeper, what they really have is a forgotten spreadsheet filled with bounced emails, outdated phone numbers, and zero insight into who is ready to buy. A real B2C database is not storage; it is infrastructure for revenue. It identifies who your buyers are, what they want, where they are in the buying journey, and how to turn their attention into action.

In this guide, you’ll learn how modern brands build profitable B2C contact databases, B2C leads databases, B2C email databases, and B2C marketing databases that generate predictable consumer sales, not random clicks.

Why 90% of B2C Databases Fail to Generate Revenue

Most B2C databases collapse because they are built for collection, not conversion. Here’s what that looks like in real life:

After a few months, marketers stop trusting email, complain about low engagement, and shift budget to paid ads. The problem was never the channel; it was the quality of the data.

What Makes a B2C Contact Database “Sales-Ready” in 2025

A modern B2C contact database must go far beyond basic contact fields. A sales-ready database includes:

| Field | Why It Converts |
|---|---|
| Verified email | Prevents bounces and spam flags |
| Mobile number | Unlocks SMS and WhatsApp sales |
| Interest category | Enables hyper-targeting |
| Location and city | Enables geo-based offers |
| Engagement score | Identifies hot buyers |
| Last activity date | Filters out dead leads |
| Purchase intent signal | Predicts conversion likelihood |

Your database must answer one question instantly: who is ready to buy today?

The Real Difference Between a B2C Leads Database and Random Email Lists

A B2C leads database is not just a list; it is a decision engine.

| Leads Database | Random Email List |
|---|---|
| Tracks intent | No behaviour data |
| Updates dynamically | Static file |
| Segmented automatically | Manual sorting |
| High deliverability | High spam risk |
| Built for sales | Built for volume |

Leads databases focus on buyer behaviour, not vanity metrics.
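Here is one way to make “who is ready to buy today?” concrete. The minimal Python sketch below models a contact record built from the sales-ready fields listed earlier in this section. It then applies a filter that drops dead leads and keeps verified, engaged contacts who show purchase intent. All of the following are illustrative assumptions, not rules from this guide, and should be tuned to your own data:

- the field names
- the 0 to 100 engagement scale
- the 180-day inactivity cutoff
- the score threshold of 70

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


# Hypothetical contact record mirroring the sales-ready fields table.
# Field names, the 0-100 engagement scale and the thresholds below are assumptions.
@dataclass
class Contact:
    email: str
    email_verified: bool        # result of an upstream verification step
    mobile: Optional[str]
    interest_category: str
    city: str
    engagement_score: int       # assumed 0-100 scale
    last_activity: date
    purchase_intent: bool       # e.g. a cart add or pricing-page visit (assumption)


DEAD_AFTER = timedelta(days=180)  # assumed inactivity cutoff for a "dead lead"
HOT_SCORE = 70                    # assumed engagement threshold for a "hot buyer"


def is_dead(contact: Contact, today: date) -> bool:
    """A contact with no activity inside the cutoff window counts as a dead lead."""
    return today - contact.last_activity > DEAD_AFTER


def ready_to_buy(contacts: list[Contact], today: date) -> list[Contact]:
    """Keep contacts that are verified, still active, highly engaged and showing intent."""
    return [
        c for c in contacts
        if c.email_verified
        and not is_dead(c, today)
        and c.engagement_score >= HOT_SCORE
        and c.purchase_intent
    ]


# Usage: the second contact has been inactive for over 180 days, so only the first is returned.
today = date(2025, 6, 1)
contacts = [
    Contact("ana@example.com", True, "+15550100", "running", "Leeds", 85, date(2025, 5, 28), True),
    Contact("ben@example.com", True, None, "running", "Leeds", 90, date(2024, 10, 1), True),
]
print([c.email for c in ready_to_buy(contacts, today)])  # ['ana@example.com']
```

Keeping the filter as plain rules makes each exclusion easy to audit. A predictive intent score could later replace the boolean `purchase_intent` without changing the shape of the record.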
Understanding B2C Data Lists and Their Role in Consumer Targeting

B2C data lists are curated consumer segments such as:

But raw lists don’t sell. Smart brands enrich them with:

This transforms ordinary lists into targeting weapons.

Building a High-Converting B2C Email Database

Your B2C email database is the only channel you truly own.

Step 1: Capture Intent, Not Curiosity

Use lead magnets tied to buying behaviour, not generic freebies.

Step 2: Verify at Entry

Never store an unverified email address; verifying at the point of capture protects your sender reputation.

Step 3: Behaviour Tagging

Track every click, scroll, and visit.

Step 4: Segment Automatically

Group users into Ready Buyers, Researchers, Deal Hunters, and Repeat Customers.

Why a B2C Marketing Database Is the Heart of Revenue Automation

A true B2C marketing database integrates:

This creates a closed loop from interest → action → loyalty.

How Scraping Solution Builds Sales-Ready B2C Databases

At Scraping Solution, we don’t sell spreadsheets. We engineer consumer acquisition systems:

✔ Real-time scraping of public consumer data
✔ AI-driven intent classification
✔ Email and phone verification pipelines
✔ Geo-segmented contact enrichment
✔ CRM-ready data delivery

This ensures every B2C database is built for profit, not clutter.

Advanced Segmentation Models That Multiply B2C Sales

Once your B2C contact database is clean and verified, the next growth lever is segmentation intelligence. Forget age and gender: high-ROI brands segment on commercial signals.

1. Behavioural Segmentation

This model tracks what users do, not what they say.

| Signal | Sales Insight |
|---|---|
| Product views | Interest strength |
| Add-to-cart | Buying momentum |
| Email clicks | Engagement level |
| Time on site | Readiness |

2. Transactional Segmentation

Your B2C marketing database should isolate:

This allows precision offers such as loyalty discounts, upsells, and reactivation flows.

3. Geo-Intent Segmentation

Location matters more than marketers realise.
Consumers behave differently in:

Your B2C data lists should be geo-tagged so that offers match each consumer’s local context.

How to Turn a Cold B2C Database into a Revenue Engine

Even if your database is old, it isn’t useless.

Step 1: Database Resurrection Audit

Remove:

Step 2: Re-Engagement Campaign

Send intent-focused content such as:

Step 3: Behaviour Re-Tagging

Tag every new click, so each contact’s profile is rebuilt from current behaviour rather than stale data.

Step 4: Micro-Offer Funnels

Create low-barrier purchases to warm up cold leads.

B2C Database Monetisation Blueprint

Here’s how profitable brands monetise their B2C leads database:

| Funnel Stage | Database Action |
|---|---|
| Awareness | Educational drip campaigns |
| Consideration | Comparison emails |
| Decision | Discount or trial |
| Retention | Loyalty automation |
| Upsell | Product bundling |

This turns your database into a compounding revenue asset.

The Compliance Framework That Protects Your B2C Marketing Database

Compliance is not a checkbox; it is your deliverability shield.

Your database must respect:

Safe Practices:

Store proof of consent.

Clean compliance = inbox trust.

Common B2C Database Killers

| Mistake | Impact |
|---|---|
| Buying bulk unverified lists | Spam blacklisting |
| No segmentation | Low conversions |
| Over-emailing | Unsubscribes |
| No automation | Manual chaos |

FAQs

Ready to Turn Your B2C Database Into a Sales Machine?

Your competitors are collecting data. You should be building revenue systems.

At Scraping Solution, we don’t deliver messy spreadsheets; we engineer sales-ready B2C databases that plug directly into your marketing funnel and start producing results.
What You Get

✔ Real-time consumer data scraping
✔ Verified B2C contact database with clean emails and phone numbers
✔ Intent-filtered B2C leads database: only buyers, no browsers
✔ Fully segmented B2C email database for personalised campaigns
✔ Geo-targeted B2C data lists for local and niche offers
✔ CRM-ready B2C marketing database delivered in your preferred format

Who This Is For

Book Your Free B2C Database Strategy Call

Let’s build a custom B2C database system designed around your product, your market, and your revenue goals.

👉 Click below to book your free consultation and start converting data into customers.
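If you want to prototype the automatic segmentation from Step 4 of the email-database section in-house, a rule-based sketch is enough to start. It assigns each contact to Ready Buyers, Researchers, Deal Hunters, or Repeat Customers. The `Behaviour` counters, the rule order, and every threshold below are illustrative assumptions, not criteria defined in this guide. In practice, the counters would be aggregated from the clicks and visits captured during behaviour tagging.

```python
from dataclasses import dataclass


# Hypothetical per-contact behaviour counters, e.g. aggregated from tagged
# click, visit and order events. All fields and thresholds are assumptions.
@dataclass
class Behaviour:
    product_views: int
    cart_adds: int
    email_clicks: int
    discount_clicks: int  # subset of email_clicks on discount/promo emails (assumption)
    orders: int


def segment(b: Behaviour) -> str:
    """Return the first matching segment; rules are checked in priority order."""
    if b.orders >= 2:
        return "Repeat Customers"
    if b.cart_adds > 0:
        return "Ready Buyers"
    # Mostly clicking on discount emails suggests a price-driven buyer.
    if b.email_clicks > 0 and b.discount_clicks / b.email_clicks >= 0.5:
        return "Deal Hunters"
    if b.product_views >= 3:
        return "Researchers"
    return "Unsegmented"  # not enough signal yet; keep tagging


if __name__ == "__main__":
    print(segment(Behaviour(product_views=5, cart_adds=0, email_clicks=2,
                            discount_clicks=0, orders=0)))  # Researchers
```

Because rules are evaluated top to bottom, a contact who qualifies for several segments lands in the first match. Once enough purchase history exists, a scoring model could replace these hand-written rules.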